The No Artificial Intelligence Fake Replicas and Unauthorized Duplications (No AI FRAUD) Act is a piece of legislation introduced in the U.S. Congress by a bipartisan group of lawmakers. The Act aims to provide federal protections for individuals’ voices and likenesses against misuse by artificial intelligence (AI) technologies.

Key Provisions of the No AI FRAUD Act

Here are the main components of the Act:

- Federal Property Right for Likeness and Voice: The Act establishes a federal property right for an individual’s likeness and voice. This means that individuals have the right to control the use of their identifying characteristics and can enforce this right against those who facilitate, create, and spread AI frauds without their permission.

- Punitive Damages and Attorneys’ Fees: The Act provides for punitive damages and attorneys’ fees that can be awarded to injured parties. Anyone who distributes a misappropriated voice or likeness, knowing it was not authorized, would likewise be liable to the injured party.

- First Amendment Defense: The Act acknowledges a First Amendment defense that requires a court to balance the intellectual property interests against the public interest in the unauthorized use. Factors for consideration include whether the use is commercial, its relevance to the primary expressive purpose, and its impact on the economic interests of the rights owners.

- Impact on Businesses: The Act is expected to have a significant impact on businesses, particularly in the creative industries. It requires businesses to ensure that their use of AI technologies respects the rights of individuals to their voices and likenesses. This may require businesses to implement new procedures and safeguards, which could entail additional costs.

The No AI FRAUD Act seeks to establish baseline federal protections for all Americans against the unauthorized use of their voices and images by AI. It gives individuals the right to control the use of their identifying characteristics and empowers them to enforce this right against those who facilitate, create, and spread AI frauds without permission. The Act recognizes that every person has a property right in their own likeness akin to other forms of intellectual property rights.

In addition, the Act allows for punitive damages and attorneys’ fees to be awarded to injured parties when their voice or image is misappropriated. Those who knowingly distribute such AI frauds without authorization would similarly be liable to pay damages under the Act. However, the legislation includes an important First Amendment defense, which courts would weigh using factors such as whether the use is commercial, whether it is relevant to the work’s primary expressive purpose, and whether it competes with the rights holder’s works.

Impact on Businesses

The No AI FRAUD Act is expected to have a significant impact on businesses, particularly in the creative industries. The Act is seen as a necessary step to protect individual creativity, preserve and promote the creative arts, and ensure the integrity and trustworthiness of generative AI. It is also expected to foster a landscape where AI aids human creativity without diminishing the value of individual identity and expression.

The Act has been welcomed by many in the music industry, who have been directly affected by the misuse of AI technologies. For instance, there have been incidents where AI was used to create and distribute songs using the voices of popular artists without their consent. The Act is seen as a meaningful step towards building a safe, responsible, and ethical AI ecosystem.

However, the Act also poses challenges for businesses. It requires them to ensure that their use of AI technologies respects the rights of individuals to their voices and likenesses. This may require businesses to implement new procedures and safeguards, which could entail additional costs.

How Resemble Detect fits into the Act

The No AI FRAUD Act, recently introduced in Congress, aims to provide federal protections against the misuse of artificial intelligence to create unauthorized copies of individuals’ voices and likenesses. This legislation comes at a critical time, as advances in AI technologies like generative models and deepfakes make it increasingly easy to synthesize believable imitations of real people’s voices.

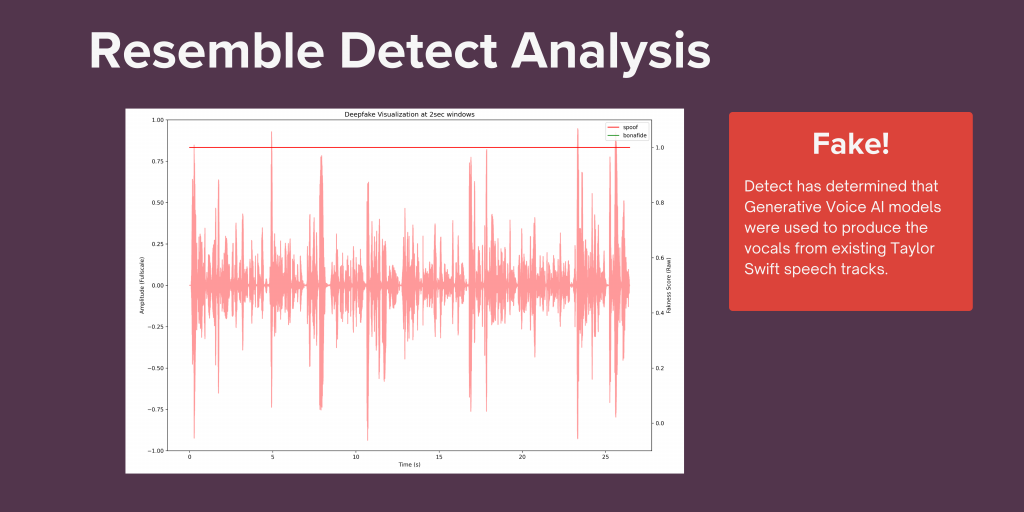

In this environment, tools like Resemble Detect play an essential role in detecting and exposing deepfake audio content. Resemble Detect is an advanced deepfake detection tool developed by Resemble AI that can identify fake audio generated by AI with up to 98% accuracy. Here’s why Detect is so vital for enforcing protections like those outlined in the No AI FRAUD Act:

- Accuracy and Reliability: Detect employs cutting-edge neural networks trained extensively to distinguish subtle differences between real human voices and AI-generated voices. This allows the tool to reliably detect even highly realistic deepfake audio that seeks to imitate a person without their consent. The high accuracy of Detect makes it an ideal technology for identifying violations of the No AI FRAUD Act.

- Real-Time Detection: Detect is designed to analyze audio files and determine their authenticity in real time. This enables rapid detection of AI-based voice fraud as soon as it surfaces online or in other media. The ability to promptly flag unauthorized synthetic voices is key to enforcing the Act’s protections.

- Seamless Integration: Detect is designed as an accessible, API-ready solution that can be integrated into existing workflows. This enables companies and platforms to efficiently incorporate deepfake detection into their content moderation pipelines at scale. Ease of integration is key to widespread enforcement.
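
To make the integration point concrete, here is a minimal sketch of how a platform might wire a deepfake detector into a content moderation pipeline. It does not show the actual Resemble Detect API: the `Detector` signature, the `moderate_uploads` function, the `ModerationResult` type, the 0.5 threshold, and the `stub_detector` placeholder are all assumptions made for illustration. A real integration would replace the stub with a call to the detection service.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# Hypothetical detector signature (an assumption, not a real API):
# takes raw audio bytes and returns the estimated probability
# (0.0 to 1.0) that the audio is AI-generated.
Detector = Callable[[bytes], float]


@dataclass
class ModerationResult:
    upload_id: str
    fake_probability: float
    flagged: bool


def moderate_uploads(
    uploads: Iterable[Tuple[str, bytes]],
    detector: Detector,
    threshold: float = 0.5,  # illustrative cutoff, not a recommended value
) -> List[ModerationResult]:
    """Score each upload and flag those at or above the threshold."""
    results = []
    for upload_id, audio in uploads:
        score = detector(audio)
        results.append(ModerationResult(upload_id, score, score >= threshold))
    return results


def stub_detector(audio: bytes) -> float:
    """Placeholder for illustration only; not a real detection model."""
    return 0.9 if audio.startswith(b"SYNTH") else 0.1


if __name__ == "__main__":
    batch = [("clip-1", b"SYNTH..."), ("clip-2", b"RIFF...")]
    for result in moderate_uploads(batch, stub_detector):
        print(result.upload_id, result.fake_probability, result.flagged)
```

Passing the detector in as a plain callable keeps the moderation logic independent of any particular vendor, so flagged items can be routed to human review regardless of which detection backend is used.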