In today’s interconnected world, the internet and social media platforms have made content more accessible than ever before. However, this technology comes with its own set of challenges. The proliferation of AI deepfakes has raised significant concerns, including the potential for defamation and scams. In this report, we’ll share the TikTok deepfake of YouTube Influencer MrBeast, provide our deepfake analysis, and explore deepfake detection tools that protect content creators and their audiences.

Lots of people are getting this deepfake scam ad of me… are social media platforms ready to handle the rise of AI deepfakes? This is a serious problem pic.twitter.com/llkhxswQSw

— MrBeast (@MrBeast) October 3, 2023

MrBeast TikTok Ad Full of Deepfake Promises

MrBeast recently shared the TikTok video above, which features a deepfake of himself promising iPhone 15 Pros to 10,000 of his fans at an unbelievably low price. The deepfake also encourages viewers to redeem their phone by clicking on a link. Notably, the video ran as a paid ad on TikTok, complete with MrBeast’s logo and a blue checkmark that made it appear official. In his post, the 25-year-old YouTube Influencer warns his fans that the video is a scam and asks whether social media platforms are ready to handle the rise of AI deepfakes. According to online reports, a TikTok spokesperson said the company removed the ad and took down the associated account. As MrBeast’s comments suggest, the video highlights the growing concern about deepfake technology.

Potential Impact of Deepfake Scams

With AI celebrity voice generators now widely accessible, deepfake Influencer scams like the MrBeast video can circulate rapidly on social media platforms. MrBeast has 191 million subscribers on YouTube alone, so a convincing fake has the potential to reach a very large audience. While there have yet to be specific reports about those impacted, the spoof video plausibly carries the following risks.

- Reputation Risk: MrBeast’s reputation and credibility were at risk due to the AI fraud associated with his name. If deepfake content of him spreads, users may also find it difficult to differentiate between real and fake content and lose interest in his programming.

- Potential AI Scam Victims: Individuals who fell for the iPhone scam could have lost money, as well as trust in online giveaways, which are an effective engagement tool for content creators.

- Online Security Concerns: The incident raised concerns about the ease with which deepfake technology can be used for scams and defamation. Users could have given up their personal information, or clicked on a link that covertly downloads malware onto their device.

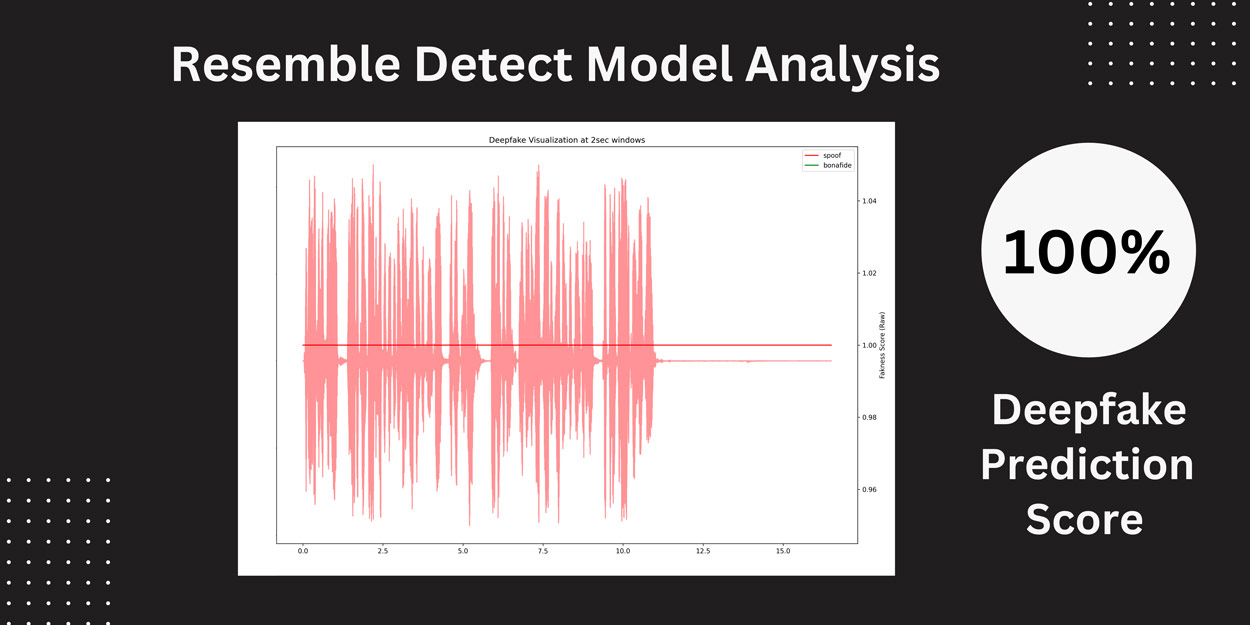

Resemble Detect’s analysis of MrBeast’s Deepfake identified the clip as a deepfake with 100% probability.

Detect Deepfakes With Resemble’s AI Security Stack

Resemble Detect

Resemble Detect is a real-time deepfake detection model that uses advanced AI to distinguish genuine audio from manipulated audio. In the context of MrBeast’s incident, the AI voice detector could have played a crucial role in the following:

- Early AI Fraud Detection: Resemble Detect’s AI voice detector could have identified the deepfake video as soon as it surfaced, helping to limit its spread across the internet. In the chart above, the deep neural model analyzed the audio in 2-second increments and determined that the video was a deepfake with a 100% prediction score.

- AI Fraud Prevention: Flagging the content as fraudulent could have acted as a safeguard for potential AI scam victims.
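
To make the idea of scoring audio in 2-second increments more concrete, here is a minimal Python sketch of window-based scoring. It is not Resemble Detect's implementation or API: the function names, the 0.5 threshold, the choice to summarize a clip as the fraction of flagged windows, and the `toy_score_window` placeholder (which only measures loudness and is not a detector) are all assumptions made for illustration. A real system would substitute a trained model for the placeholder.

```python
from typing import Callable, Dict, List, Sequence


def split_into_windows(
    samples: Sequence[float], sample_rate: int, window_seconds: float = 2.0
) -> List[Sequence[float]]:
    """Cut a mono audio signal into consecutive fixed-length windows.

    The last window may be shorter than the others.
    """
    window_size = int(sample_rate * window_seconds)
    if window_size <= 0:
        raise ValueError("window must contain at least one sample")
    return [samples[i:i + window_size] for i in range(0, len(samples), window_size)]


def toy_score_window(window: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained model. NOT a deepfake detector.

    Returns the mean absolute amplitude, capped at 1.0, so the sketch runs
    without any external dependency.
    """
    if not window:
        return 0.0
    mean_abs = sum(abs(x) for x in window) / len(window)
    return min(1.0, mean_abs)


def score_clip(
    samples: Sequence[float],
    sample_rate: int,
    score_window: Callable[[Sequence[float]], float],
    threshold: float = 0.5,  # assumed cut-off, chosen only for illustration
) -> Dict[str, object]:
    """Score every 2-second window and summarize the clip.

    The summary (fraction of windows at or above the threshold) is an
    assumed aggregation rule, not one taken from the report.
    """
    windows = split_into_windows(samples, sample_rate)
    scores = [score_window(w) for w in windows]
    flagged = sum(1 for s in scores if s >= threshold)
    fraction = flagged / len(scores) if scores else 0.0
    return {"window_scores": scores, "fraction_flagged": fraction}
```

Scoring each window separately means a clip that is only partly manipulated can still be flagged, and the per-window scores can be plotted over time in the same way as the chart above.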

PerTh AI Watermarker

As another content verification measure, Resemble’s PerTh AI Watermarker is designed to protect intellectual property and content authenticity by embedding invisible watermarks into audio content. If MrBeast’s content library had been watermarked with PerTh, our team could have analyzed the audio from the ad to verify whether it was original.

Pioneering The Path For AI Safety

The incident involving MrBeast and the iPhone 15 giveaway scam underscores the growing threat of deepfake technology to online identities and security. This report emphasizes the importance of proactive measures such as Resemble AI’s security stack. By utilizing Resemble Detect for early detection and PerTh AI Watermarker for content verification, content creators and organizations can protect their reputations and content authenticity. Collaboration between responsible AI companies, social media platforms, and content creators is essential to AI safety.