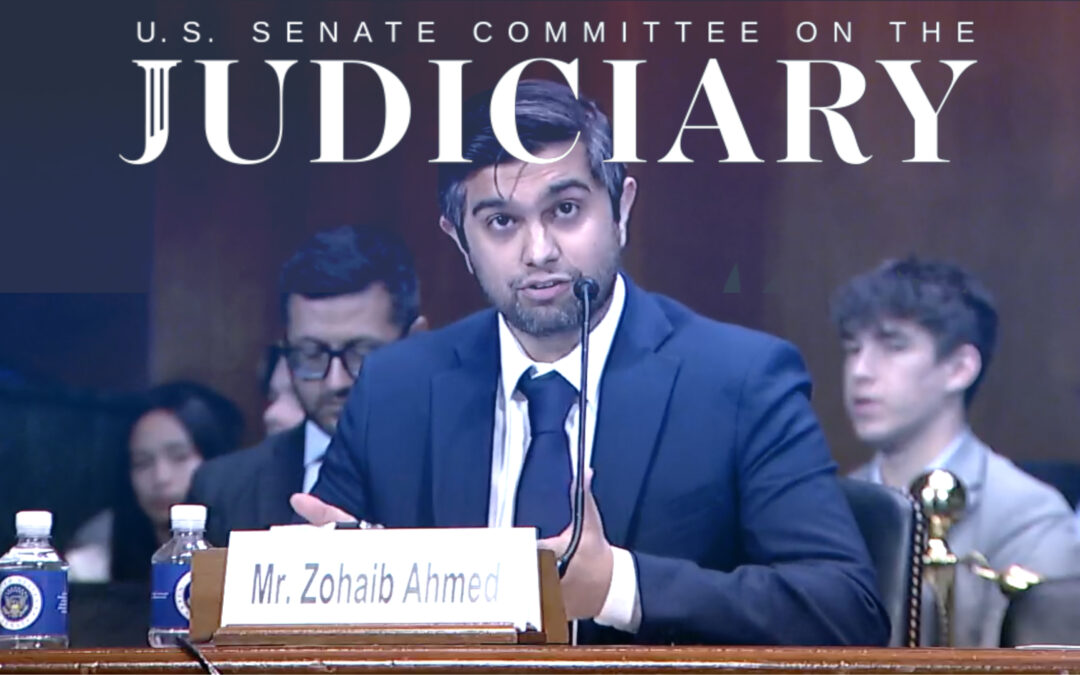

The Rise of Deepfake Deception

The proliferation of deepfake technology has made it increasingly difficult to determine whether audio, images, or video are real or AI-generated. Deepfakes, especially in the form of fake celebrity voices, threaten to undermine trust and spread misinformation. Below are recent deepfakes of a prominent 20-year-old climate change activist, a YouTuber, and the First Lady of the United States that have gone viral on social media.

Deepfakes of climate activist Greta Thunberg, YouTuber MrBeast, and the First Lady of the United States.

Manipulated media can sway opinion or damage reputations, and AI misuse affects the many ordinary people who consume this fake content. While deepfake detection has improved, staying ahead of generative AI requires deep learning techniques to keep evolving.

The Challenges of Human Detection

The latest advancements in AI voice synthesis allow for the creation of shockingly realistic fake voices. Deepfake voice generators enable anyone to produce high-quality deepfake audio mimicking a public figure’s voice. The outputs of these deepfake voice AI models frequently pass as authentic to the human ear. Recent academic research finds that humans fail to detect more than 25% of deepfake speech samples. However, telltale artifacts or manipulations can appear upon closer analysis.

A New Line Of Defense

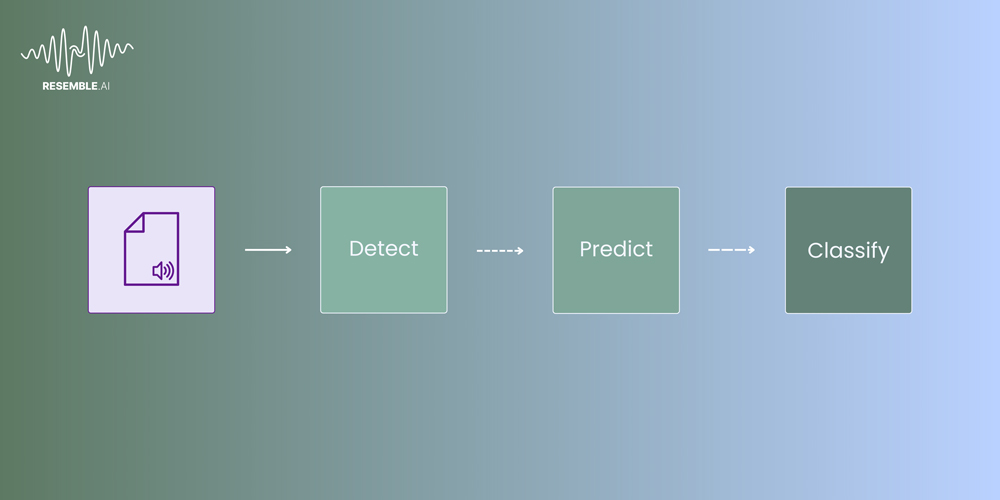

Until this point, there’s been a heavy reliance on multimodal deepfake detection, leveraging artifacts from the image, video, and audio domains. However, alarming research reveals that humans overestimate their ability to detect manipulated media. Audio and speech deepfakes in particular currently pose the most risk. For this reason, our research engineers at Resemble AI have set out to detect deepfake content purely from audio with Resemble Detect. Identifying deepfake audio at 98% accuracy, our robust deepfake detection model is a real-time, API-ready solution.

How Is Fake Speech Different?

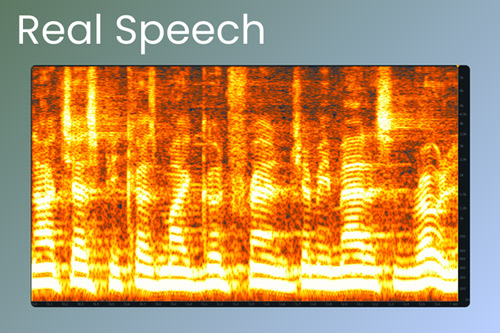

Before we dive into the emerging deep learning techniques that enhance Detect’s ability to identify deepfake audio, let’s explore the most obvious differences between real and fake speech. The representation of audio signals in an audio file varies between real and fake speech. Traditionally, text-to-speech and speech-to-speech AI models don’t produce audio signals that are as immaculate as a human voice. They can leave purely signal-level artifacts that are difficult to hear but exist nonetheless.

Analysis of Joe Biden’s Real vs. Fake Speech

The spectrogram images below correspond to the audio samples beneath them. The audio comes from two equal-length excerpts of Joe Biden speaking. Both clips were obtained from YouTube for demonstration purposes. Each has been normalized to an equal loudness of -23 LUFS and resampled to 16 kHz. The processing is applied to rule out differences caused by loudness or by the codecs YouTube applies.

Real Speech – Joe Biden

A human recording is typically full of rich audio signals.

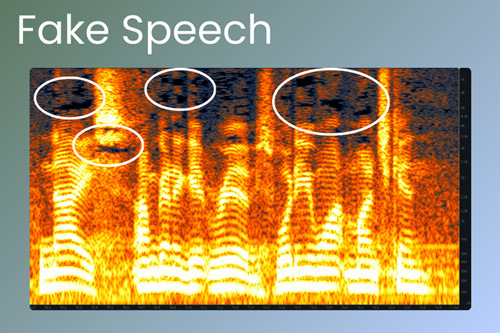

Fake Speech – Joe Biden

The clip has visible patches, and its content sits in a lower frequency range.

Under close examination, the spectrogram of the real speech shows a dynamic, smooth, and rich recording. Conversely, the fake speech has clearly visible patches, which are typically indications of manipulated audio. It is important to note that these patches can also originate from audio compression codecs like MP3 or AAC. We’ll touch on this phenomenon shortly.

Mel Spectrograms and Their Limitations

The most obvious way to detect these artifacts would be to find audio signal representations that can capture them. In earlier iterations of deepfake technology, it may have been possible to identify these artifacts by visualizing the audio’s spectrogram. At times, though not consistently, the artifacts would show up as patches or smears of noise in the spectrogram.

The audio representation that generative audio models often depend on is the Mel Frequency Spectrogram. This looks at the frequencies in the audio signal and transforms them into a representation that resembles what the human ear hears.
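As a rough illustration of the frequency warping behind a mel spectrogram, the sketch below uses one widely used mel formula (the HTK-style `2595 * log10(1 + f / 700)`). The function names are illustrative, and the article does not say which mel variant, band count, or frequency range any particular model uses.

```python
import math


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (HTK-style formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(n_bands: int, f_min: float, f_max: float) -> list[float]:
    """Return n_bands + 2 band edges in Hz, equally spaced on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_bands + 2)]


if __name__ == "__main__":
    # Band count and range are arbitrary example values (8 kHz is the
    # Nyquist frequency of 16 kHz audio).
    for edge in mel_band_edges(n_bands=8, f_min=0.0, f_max=8000.0):
        print(f"{edge:8.1f} Hz")
```

Because the edges are evenly spaced in mels, the bands are narrow at low frequencies and wide at high frequencies, which roughly mirrors how human hearing resolves pitch.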

However, recent studies have shown that the Mel Spectrogram isn’t a very robust representation for detecting today’s deepfakes. The AI models that generate fakes are technically “statistical approximations”, i.e. at best they approximate a person’s voice by capturing features that are common across large collections of recordings from many people. They are not likely to be perfect clones.

Leveraging Deep Learning for Accurate Detection

Since we can’t necessarily rely on human-devised representations like the Mel-Frequency Spectrogram, we’ve opted out of deploying them at all. Instead, we pit machine against machine. In our efforts to tackle the deepfake dilemma, we use deep learning to learn a completely different representation of the audio signal. This representation is obtained by training Resemble Detect’s model to learn for itself what is real and what is fake.

In other words, the AI model is forced to transform the audio signal into a machine-learned representation of the audio. The model learns this representation by training on several hours of real and fake audio samples, which helps Detect make a high-probability prediction on whether audio is real or fake. The representation is not interpretable by humans, so one cannot draw conclusions about why the model reached a given decision when assessing the validity of the audio.

Navigating Challenges With Emerging ML Techniques

In general, a raw audio recording leads to a larger file size. Streaming services like YouTube, TikTok, etc. apply audio codecs to reduce the payload size transmitted over a network. These codecs work by discarding information in the audio signal that the human ear would not miss, so the file size is reduced without the listener noticing. These codecs can also leave patches and artifacts in the audio, like the patches seen in the fake Joe Biden audio clip above. Such artifacts can impair the deepfake detection model’s ability to decide whether the signal is real or fake.

Furthermore, fake content that finds its way onto social media typically has music in the background. Through extensive experimentation, our research team has noticed that deepfake detectors can become confused by this additional sound. They have also found that detection accuracy improves when such content is first run through a source separation model. The speech stem extracted in the source separation step can then be passed on to the AI voice detector.

Fingerprinting

Deepfake detectors are trained on many hours of fabricated audio data that were generated using many different deepfake methods. In light of the rapid progression of generative AI, new models or architectures are likely entering the space. Without the necessary training on these new variations of deepfake technology, an AI voice detector becomes susceptible to inaccurately classifying audio data. To tackle this, our engineers leverage another deep learning representation. Resemble Detect learns to keep track of various real audio from a long list of speakers during the training phase. The AI model records this information and saves it as an ‘audio fingerprint’ for each speaker. The model then generates a similar representation for a new unseen clip and compares it with the fingerprints of the nearest speaker, allowing it to discard fakes by a process of elimination.
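The comparison step can be sketched roughly as follows. This is a minimal illustration, not Resemble Detect's implementation: it assumes fingerprints are fixed-length embedding vectors, uses cosine similarity as the distance measure, and picks an arbitrary threshold of 0.8. The names `nearest_speaker` and `looks_real` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_speaker(embedding, fingerprints):
    """Return (speaker_name, similarity) for the closest stored fingerprint."""
    scored = (
        (name, cosine_similarity(embedding, fingerprint))
        for name, fingerprint in fingerprints.items()
    )
    return max(scored, key=lambda pair: pair[1])


def looks_real(embedding, fingerprints, threshold=0.8):
    """Treat a clip as plausibly real only if it sits close to a known speaker.

    The threshold is an assumed value chosen for illustration.
    """
    _, similarity = nearest_speaker(embedding, fingerprints)
    return similarity >= threshold


if __name__ == "__main__":
    # Toy 3-dimensional fingerprints; real embeddings would be much larger.
    fingerprints = {"speaker_a": [1.0, 0.0, 0.0], "speaker_b": [0.0, 1.0, 0.0]}
    print(nearest_speaker([0.9, 0.1, 0.0], fingerprints))
    print(looks_real([1.0, 1.0, 1.0], fingerprints))
```

The appeal of this design is that it depends only on real audio from known speakers, so a clip produced by an unseen generation method can still be flagged if it lands far from every stored fingerprint.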

Classification

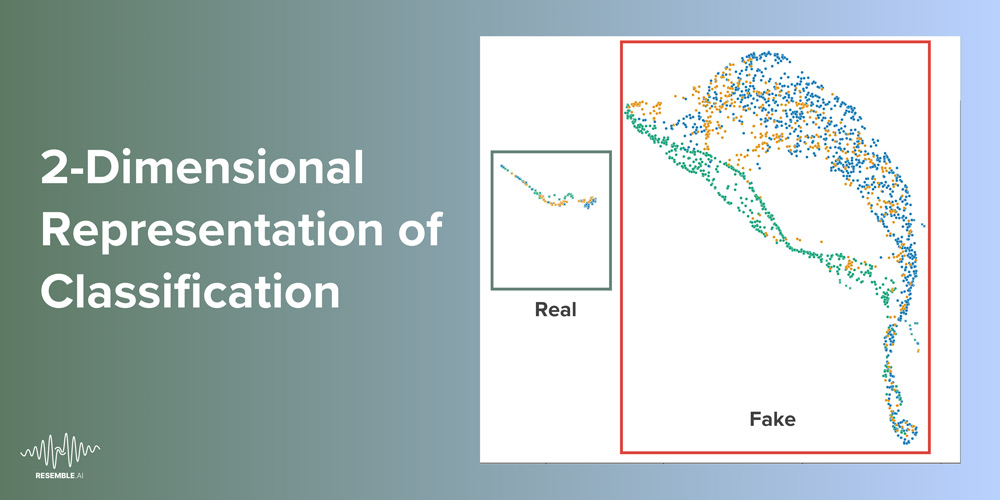

The image shows a large test on audio clips that the test model did not see during its training phase. The team already knew which clips were real and which were fake, but was keen to see how the model classified each. The fake audio clips are marked with an ‘o’ and the real audio clips with an ‘x’. The image reveals how the real clips form a cluster on the left-hand side of the model’s learned representation. Opposite them, the fake audio clips, marked with an ‘o’, form a swarm on the right.

A visual representation of the test model’s organization of classified real and fake audio clips.

We should note that there are some rogue ‘x’s in the swarm to the right, which ideally should only consist of fake clips. However, these rogue ‘x’s may or may not be false classifications. This can’t be known for sure because the 2-D image only approximates the model’s representation, which in reality has around 300 dimensions. The human eye therefore cannot judge the true distances between individual audio clips from the plot. For the sake of visualization, we use the UMAP dimensionality reduction algorithm to project the high-dimensional space into a two-dimensional visual.

The team is also incorporating confidence into the model’s predictions. A model that can report its own uncertainty about the validity of an audio clip allows us to take its less-confident predictions less seriously.
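One simple way to act on such a confidence signal is to abstain when the prediction is too close to the decision boundary. The sketch below is only an assumption about how this could look: it treats the model output as a single probability that the clip is fake and uses an arbitrary margin. The function name `decide` and the default margin are hypothetical.

```python
def decide(p_fake: float, margin: float = 0.2) -> str:
    """Map a fake-probability to 'fake', 'real', or 'uncertain'.

    Confidence is the distance from the 0.5 decision boundary, rescaled
    to the range [0, 1]. Predictions below `margin` are not trusted.
    """
    if not 0.0 <= p_fake <= 1.0:
        raise ValueError("p_fake must be a probability in [0, 1]")
    confidence = abs(p_fake - 0.5) * 2.0
    if confidence < margin:
        return "uncertain"
    return "fake" if p_fake > 0.5 else "real"


if __name__ == "__main__":
    for score in (0.95, 0.55, 0.05):
        print(score, decide(score))
```

Clips labelled `uncertain` could then be routed to a human reviewer or a second check instead of being reported as a firm verdict.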

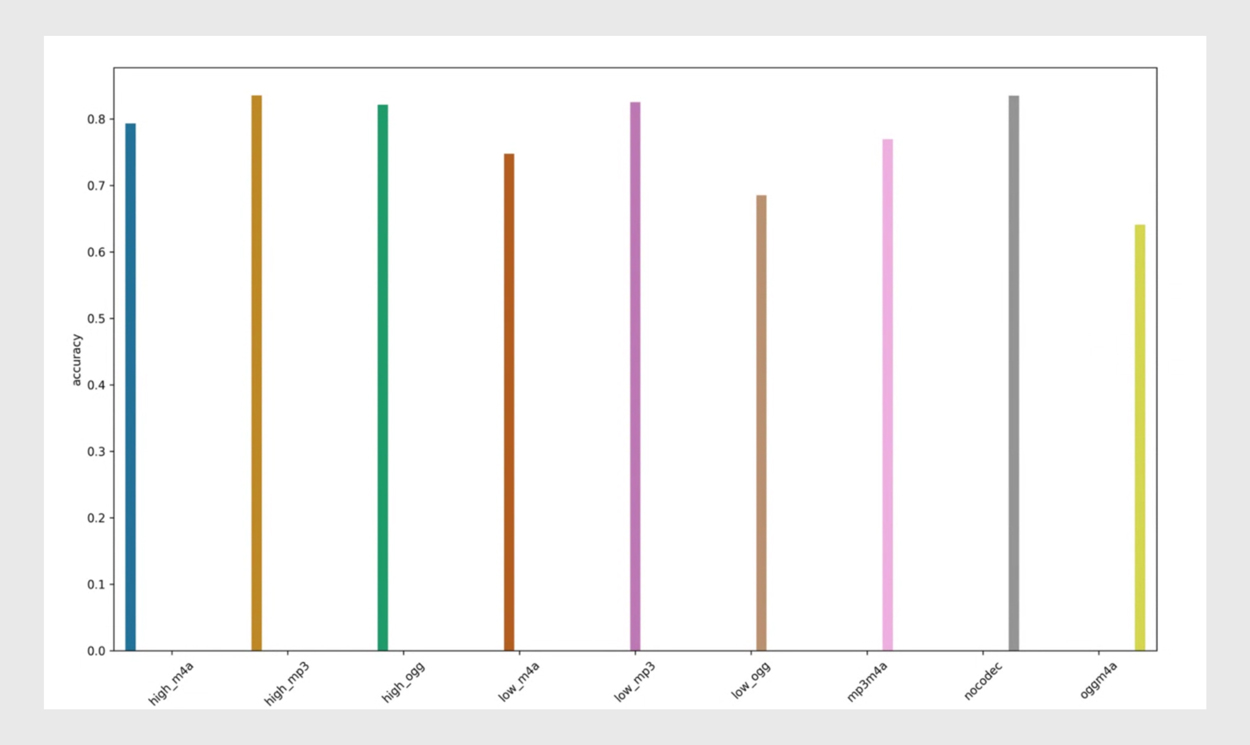

Codecs

Earlier in the article, we mentioned that codecs can leave their own artifacts in the audio, causing clips encoded with some codec to be misclassified as real or fake. Below is an example of how prediction scores vary depending on the model’s training data. The only codec-encoded clips in this sample model’s training dataset were MP3 clips. We can see how the accuracy for detecting that a clip is bona fide falls from 80%+ for clips that had no codecs applied or just high- or low-bitrate MP3 encodings, to less than 70% when clips are encoded with some kind of OGG/Vorbis encoding. This shows how a popular codec like OGG can make it hard for the model to know if the data is real or fake. In order to achieve 98% deepfake detection accuracy, Detect trains with codecs during the training stage. The codecs are applied to some batches of audio at random, regardless of whether the clips are real or fake. This helps the internal representation learned by the model become more robust against codec-like conditions.

The chart reflects a test model’s accuracy against varying codecs after being trained solely on a small amount of MP3 data.
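The random codec augmentation described in this section might look roughly like the sketch below. It is not Resemble Detect's training code: the `codecs` mapping holds placeholder callables (a real pipeline would invoke an actual encoder and decoder there), and `p_apply` is an assumed probability. What it does preserve from the description is that a whole batch is either encoded or left alone at random, and that labels play no part in the choice.

```python
import random


def augment_batch(batch, codecs, p_apply=0.5, rng=None):
    """Randomly round-trip a whole batch through one codec.

    batch   -- list of (audio, label) pairs
    codecs  -- dict mapping a codec name to a callable audio -> audio
               (placeholders here; hypothetical, not a real library API)
    p_apply -- assumed probability that a batch gets a codec applied
    """
    rng = rng or random.Random()
    if rng.random() >= p_apply:
        return list(batch)  # leave this batch untouched
    codec_name = rng.choice(sorted(codecs))
    encode = codecs[codec_name]
    # The label is carried through unchanged: real and fake clips are
    # treated identically, so the codec cannot become a shortcut feature.
    return [(encode(audio), label) for audio, label in batch]


if __name__ == "__main__":
    # Stand-in "codecs" that just tag the audio so the effect is visible.
    fake_codecs = {
        "mp3": lambda audio: f"mp3({audio})",
        "ogg": lambda audio: f"ogg({audio})",
    }
    batch = [("clip_1", "real"), ("clip_2", "fake")]
    print(augment_batch(batch, fake_codecs, p_apply=1.0, rng=random.Random(0)))
```

Applying the codec independently of the label matters: if only fake clips were ever encoded, the model could learn to detect the codec instead of the deepfake.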

Future-Proofing The Auditory Landscape With Resemble Detect

As we venture further along in the AI era, the challenges surrounding deepfake deception increase, particularly in the domain of audio manipulation. Our ever-advancing technologies are a double-edged sword; they both facilitate and combat deception. However, with Resemble Detect, we have constructed an advanced and real-time API-ready solution that achieves a staggering 98% accuracy in identifying fake audio. This model transcends traditional methodologies, leveraging state-of-the-art deep learning techniques that “fight fire with fire,” so to speak—using AI to counteract the cunning capabilities of deepfakes.

Our foray into machine-learned audio representations and adaptive strategies to mitigate confounding factors such as codecs and background music places us on the cutting edge of this field. We continually adapt to the pace of innovation, understanding that in a rapidly evolving digital landscape, a passive stance is not an option. Moreover, our commitment to research and development ensures that our systems are poised to tackle the emerging variants of generative AI, thus offering a robust and future-proof line of defense for enterprises and individual users. As we advance, we aim to further refine our models, thereby contributing to a more secure and trustworthy auditory landscape.

To learn more about Resemble Detect, please click the button below to schedule time with a Resemble team member.