Fake news creators on YouTube have been exploiting artificial intelligence (AI) and automated tools to generate and spread misinformation targeting Black celebrities, creating a significant challenge for the digital information landscape. This trend of deepfakes targeting Black celebrities is most visible in videos that mix AI-generated content with manipulated media to spread false narratives about well-known figures such as Sean “Diddy” Combs, T.D. Jakes, Steve Harvey, and others. These videos often remix real news events with fabricated information, misleading viewers and rapidly gaining popularity because of their sensational nature.

The impact of these deepfakes is multifaceted. They not only tarnish the reputations of the targeted celebrities but also contribute to a broader climate of distrust and misinformation online. This is especially concerning with the 2024 elections approaching, raising fears that disinformation efforts could influence electoral outcomes and deepen social divisions. The situation is further complicated by YouTube's response: although the platform has announced policies requiring labels for synthetic and manipulated content, it has struggled to curb the spread of these fake news videos.

The use of AI to create and spread fake news about Black celebrities reflects a broader problem of racial inequity in the digital space. AI-generated misinformation is becoming more prevalent and more sophisticated, which makes it harder to detect and counter. This trend disproportionately affects groups whose health and social conditions are already vulnerable, including Black and Hispanic populations, by targeting them with content crafted specifically to mislead them. The rise of AI-generated fake content aimed at Black celebrities and voters underscores the urgent need for effective strategies to combat digital misinformation and protect vulnerable communities.

Civil rights leaders and experts have warned about the potential of AI to deepen racial and economic inequities, as well as to erode democracy by enabling more sophisticated disinformation campaigns. The challenge lies in developing and implementing measures that can effectively identify and mitigate AI-generated misinformation without infringing on freedom of expression or exacerbating existing social inequalities.

What Are Examples of Black Celebrities Being Targeted by AI-Generated Deepfakes?

The use of AI and automated tools to spread misinformation about Black celebrities on YouTube has been documented in a number of cases. Here are some specific examples:

- T.D. Jakes and Sean “Diddy” Combs: An attorney for megachurch pastor T.D. Jakes said that Jakes is the latest prominent figure to be targeted by false information on YouTube. Several videos have tied Jakes, without any evidence, to the rape and sex trafficking allegations against Sean “Diddy” Combs. These videos use AI-generated content to build false narratives and have drawn viewers worldwide.

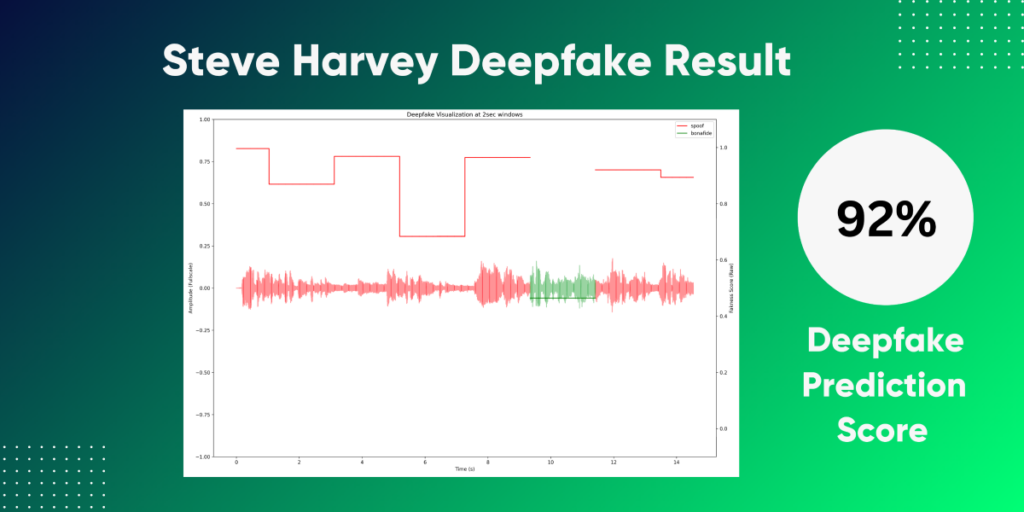

- Steve Harvey: Fake news stories have falsely claimed that Marjorie Harvey cheated on Steve Harvey with his bodyguard. Harvey addressed these false allegations in a YouTube video, drawing attention to the misinformation targeting him.

- General Impact on Black Celebrities: AI-generated misinformation is not aimed at just a few individuals; it affects many well-known Black figures, including rapper and record executive Sean “Diddy” Combs, TV host Steve Harvey, actor Denzel Washington, and Bishop T.D. Jakes. Many of the video titles and voiceovers push fake narratives built on recent high-profile lawsuits and other sensational news events.

These examples show how AI-generated misinformation aimed at Black celebrities damages the reputations of the people it targets and feeds a broader climate of distrust and misinformation online.

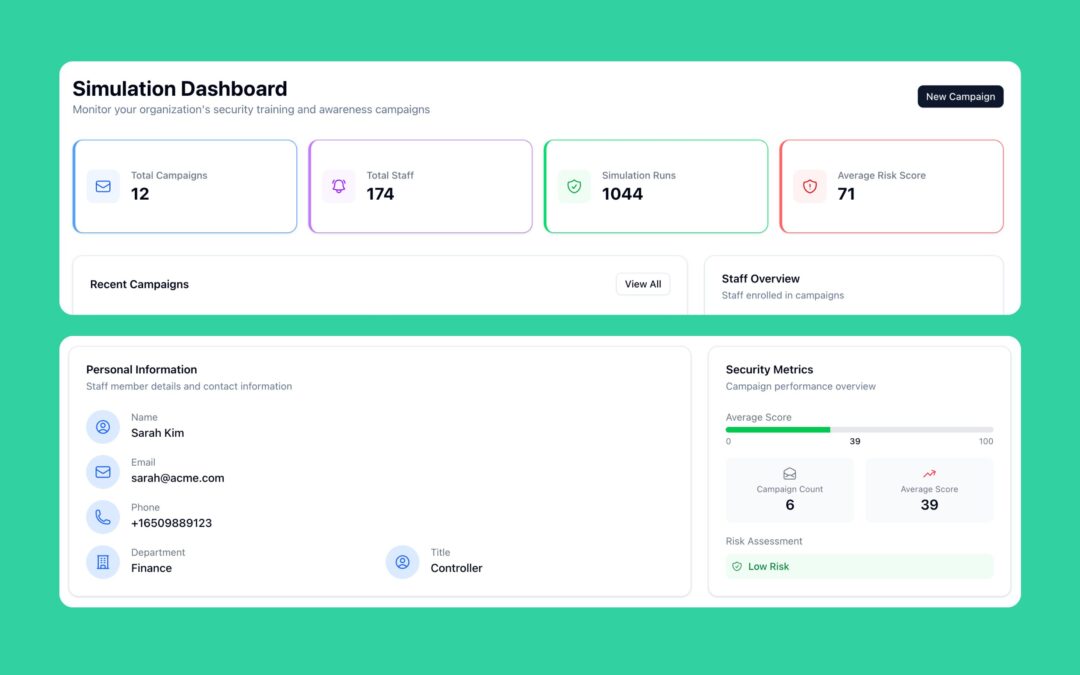

Investigation of Deepfakes with Resemble Detect

Resemble AI offers a suite of tools that can help combat the spread of AI-generated misinformation, such as the kind targeting Black celebrities on YouTube.

Resemble Detect is a deep neural network trained to distinguish real audio from AI-generated audio. It analyzes audio frame by frame, so that even a short span of inserted or altered audio can be detected. This tool could be used to identify and flag videos that use AI-generated voices to spread false narratives about celebrities.
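Why frame-by-frame analysis matters can be illustrated with a minimal sketch. This is not Resemble Detect's model or API: `flag_synthetic_segments` and `mean_score` are hypothetical names, and `mean_score` is a toy stand-in for a trained classifier that only works on the contrived marker values below. The point is that a brief inserted segment gets diluted when a whole clip is scored at once, but stands out when each short frame is scored separately.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical frame scorer: maps a window of samples to a score in [0, 1],
# where higher means "more likely synthetic". A real detector would use a
# trained neural network here; this sketch accepts any callable (assumption).
FrameScorer = Callable[[Sequence[float]], float]


def flag_synthetic_segments(
    samples: Sequence[float],
    sample_rate: int,
    score_frame: FrameScorer,
    frame_seconds: float = 1.0,
    hop_seconds: float = 0.5,
    threshold: float = 0.5,
) -> List[Tuple[float, float, float]]:
    """Score overlapping frames; return (start_s, end_s, score) for flagged ones."""
    frame_len = int(frame_seconds * sample_rate)
    hop = int(hop_seconds * sample_rate)
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame and hop must each span at least one sample")

    flagged = []
    # Slide a fixed-length window across the clip; a trailing partial frame is skipped.
    for start in range(0, len(samples) - frame_len + 1, hop):
        score = score_frame(samples[start:start + frame_len])
        if score >= threshold:
            flagged.append((start / sample_rate, (start + frame_len) / sample_rate, score))
    return flagged


def mean_score(frame: Sequence[float]) -> float:
    """Toy stand-in scorer: the mean of the frame's values (NOT a real detector)."""
    return sum(frame) / len(frame)


# Contrived 10-second clip at 4 samples/second: 0.0 marks "genuine" samples and
# 1.0 marks a 1-second inserted segment (samples 16-19, i.e. 4.0 s to 5.0 s).
clip = [0.0] * 40
clip[16:20] = [1.0] * 4

print(mean_score(clip))  # 0.1: averaged over the whole clip, the insert is diluted
print(flag_synthetic_segments(clip, 4, mean_score, threshold=0.75))  # [(4.0, 5.0, 1.0)]
```

The frame length, hop size, and threshold are illustrative assumptions. Overlapping frames (a hop shorter than the frame) cost extra computation but localize more precisely where a suspect segment begins and ends.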