The Scarlett Johansson-OpenAI “Sky” voice controversy erupted when OpenAI unveiled its new AI assistant with voice capabilities, featuring a voice called “Sky” that many users found eerily similar to Johansson’s voice from the movie “Her.”

Background

In May 2024, OpenAI introduced GPT-4o, an advanced language model with audio capabilities, allowing users to converse with the AI assistant using voice commands. One of the five available voices was named “Sky,” which drew comparisons to Scarlett Johansson’s voice from the 2013 film “Her,” where she voiced an AI assistant.

Johansson’s Allegations

Johansson released a statement claiming that OpenAI CEO Sam Altman had approached her in September 2023, offering to hire her to voice the ChatGPT system, which she declined. She expressed shock and anger upon hearing the “Sky” voice, stating it sounded uncannily like her own, to the point where her friends and news outlets couldn’t tell the difference. Johansson alleged that Altman had “insinuated that the similarity was intentional” by tweeting a reference to “Her” during the GPT-4o launch.

OpenAI’s Response

OpenAI initially denied any intentional imitation of Johansson’s voice, stating that “Sky” belonged to a different professional actress using her natural speaking voice. However, they agreed to pause the use of the “Sky” voice “out of respect for Ms. Johansson.” In a blog post, OpenAI explained their process of selecting voices based on criteria like timelessness, approachability, and trustworthiness, without deliberately mimicking celebrities.

Technical Analysis

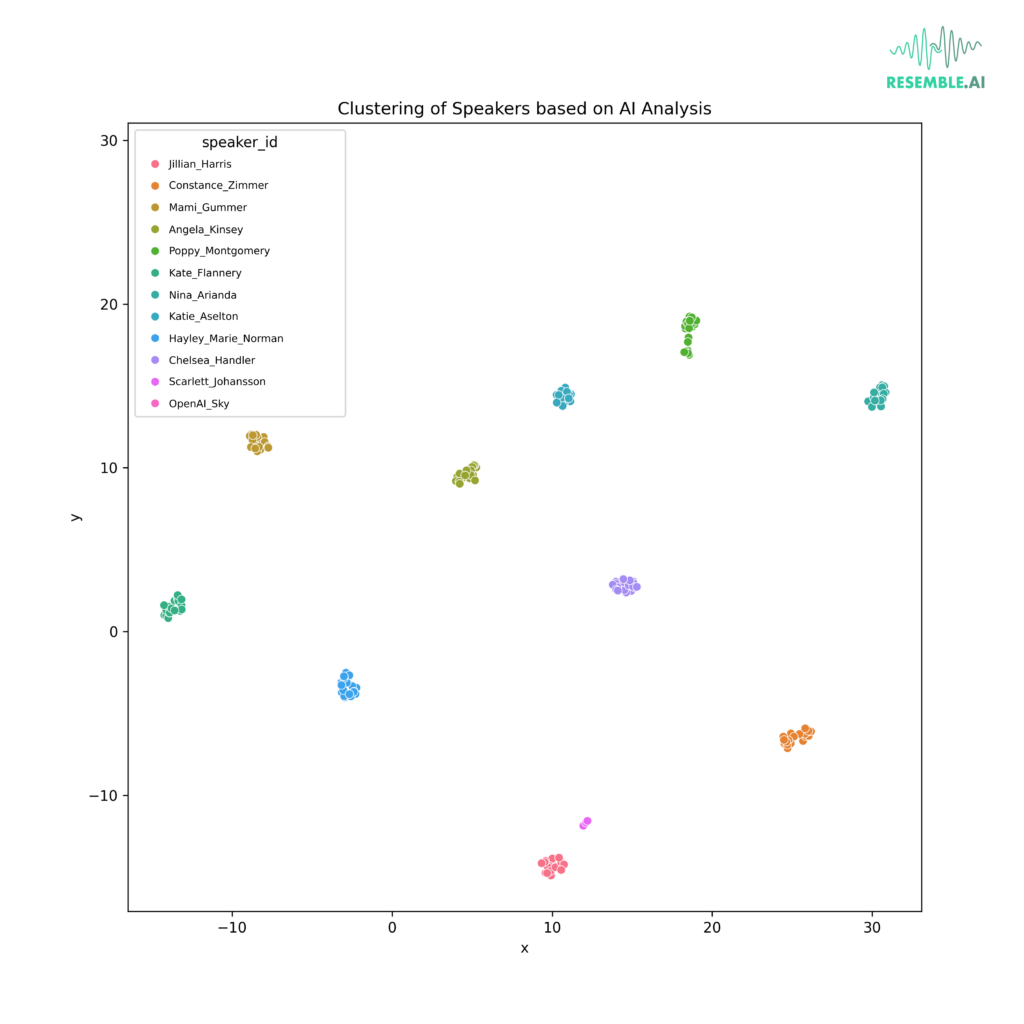

To address these concerns, we leveraged our proprietary speaker identification model. Using Resemblyzer, an open-source Python package we developed, we conducted a detailed analysis. Resemblyzer derives a high-level representation of a voice through a deep learning model known as the voice encoder. Given a recording of speech, this model creates a summary vector of 256 values (an embedding) that encapsulates the unique characteristics of the voice.
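As a minimal sketch of the embedding step, assuming the open-source `resemblyzer` package is installed, a single recording can be turned into an embedding roughly as follows. The function name `embed_voice` and any file path passed to it are illustrative assumptions, not part of our analysis pipeline.

```python
from pathlib import Path


def embed_voice(wav_path):
    """Return a speaker embedding for one audio file (illustrative sketch)."""
    # Imported lazily so this module still loads where resemblyzer is absent.
    from resemblyzer import VoiceEncoder, preprocess_wav

    # Resample the audio, normalize its volume, and trim long silences.
    wav = preprocess_wav(Path(wav_path))
    # Load the pretrained voice encoder.
    encoder = VoiceEncoder()
    # Produce one fixed-length, L2-normalized vector for the whole utterance.
    return encoder.embed_utterance(wav)
```

Calling `embed_voice("some_clip.wav")` on a hypothetical local recording would return a NumPy vector that can then be compared against embeddings of other clips.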

Our analysis involved plotting the voice embeddings of several speakers, including the disputed Sky voice and Scarlett Johansson’s voice. The resulting clustering, shown in the plot above, illustrates the distinct yet closely related nature of these voices.

In the plot:

- Scarlett Johansson’s voice is represented by the pink cluster.

- The Sky voice, labeled “OpenAI_Sky,” is depicted in red.

While the embeddings indicate high similarity, our model shows that the Sky voice, although close to Scarlett Johansson’s voice, is still distinguishable from it. This distinction suggests that the voices are similar but not identical, and that Sky is therefore not a direct clone.
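One common way to quantify "similar but distinguishable" is cosine similarity between embeddings. The sketch below uses made-up three-dimensional toy vectors chosen only to illustrate the idea. They are not the embeddings from our analysis, and the helper names and speaker labels are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def centroid(embeddings):
    """Average several utterance embeddings into one speaker-level vector."""
    n = len(embeddings)
    return [sum(dim) / n for dim in zip(*embeddings)]


# Toy utterance embeddings (hypothetical values, 3-D for readability).
speaker_a = [[0.90, 0.10, 0.05], [0.88, 0.12, 0.07]]
speaker_b = [[0.80, 0.30, 0.10], [0.78, 0.33, 0.12]]
speaker_c = [[0.10, 0.20, 0.95], [0.12, 0.18, 0.93]]

# Similarity between speakers, measured on their averaged embeddings.
sim_ab = cosine_similarity(centroid(speaker_a), centroid(speaker_b))
sim_ac = cosine_similarity(centroid(speaker_a), centroid(speaker_c))
# Similarity between two clips of the same speaker.
within_a = cosine_similarity(speaker_a[0], speaker_a[1])

print(f"A vs B: {sim_ab:.3f}, A vs C: {sim_ac:.3f}, within A: {within_a:.3f}")
```

In this toy setup, speaker A sits much closer to B than to C, yet two clips of A are closer still to each other. That gap between within-speaker and cross-speaker similarity is what lets a speaker identification model separate two voices that sound alike.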

Moving Forward

Resemble AI remains committed to ethical standards and transparency in AI voice synthesis. We will continue to refine our models and contribute to open-source tools like Resemblyzer to foster trust and innovation in the industry.

At Resemble AI, we have developed cutting-edge solutions to enhance AI security and protect our customers’ content libraries. Our Neural Speech AI Watermarker, PerTh, embeds an inaudible watermark into audio files to ensure the traceability and integrity of the content. Because the watermark is both imperceptible and persistent, this technology helps safeguard against copyright infringement and deepfake AI voice manipulation.

PerTh has been enhanced to remain detectable even after the audio has been processed by other speech synthesis models. This capability allows us to track and verify the origin of audio files, ensuring that any unauthorized use or tampering can be efficiently detected.

Additionally, we have introduced “Detect,” a state-of-the-art deepfake detection tool designed to identify fake audio with up to 98% accuracy. Detect utilizes a sophisticated neural network to analyze audio data, distinguishing between real and fake content. This tool provides real-time detection, ensuring that our customers can promptly identify and address any instances of AI voice fraud.

By integrating PerTh and Detect, we offer a robust AI security solution that not only protects against deepfake audio but also ensures the ethical use of AI-generated content. Our commitment to advancing AI safety and reliability continues to drive our innovation, providing our customers with the assurance that their voice data remains secure and authentic.