As generative AI has grown in popularity in recent months, so have concerns about its ethical use. Fortunately, ethical standards have been built into Resemble AI’s process from the very beginning, and we continue to improve on the safeguards already in place.

Ethical By Design

Ethics are at the core of Resemble AI. Our platform is designed to ensure that only the intended speaker can clone their own voice, and we enforce strict usage guidelines to prevent malicious use of our technology. When working with companies on customized solutions, we follow a rigorous process to ensure proper consent and lawful use of voice data.

Holding Ourselves To A Higher Standard

While we’re working with the private and public sectors to use generative AI for good, we also have safeguards in place to maintain our ethical standards, including:

- a dedicated team that is responsible for ensuring that our voice cloning technology is used ethically and responsibly

- policies and guidelines that our customers must follow, including a consent line that must be recorded in the same voice that is being used to generate new audio

- guidelines designed to ensure that our technology respects people’s privacy and data rights and is not used in ways that might cause harm

Staying On The Offensive

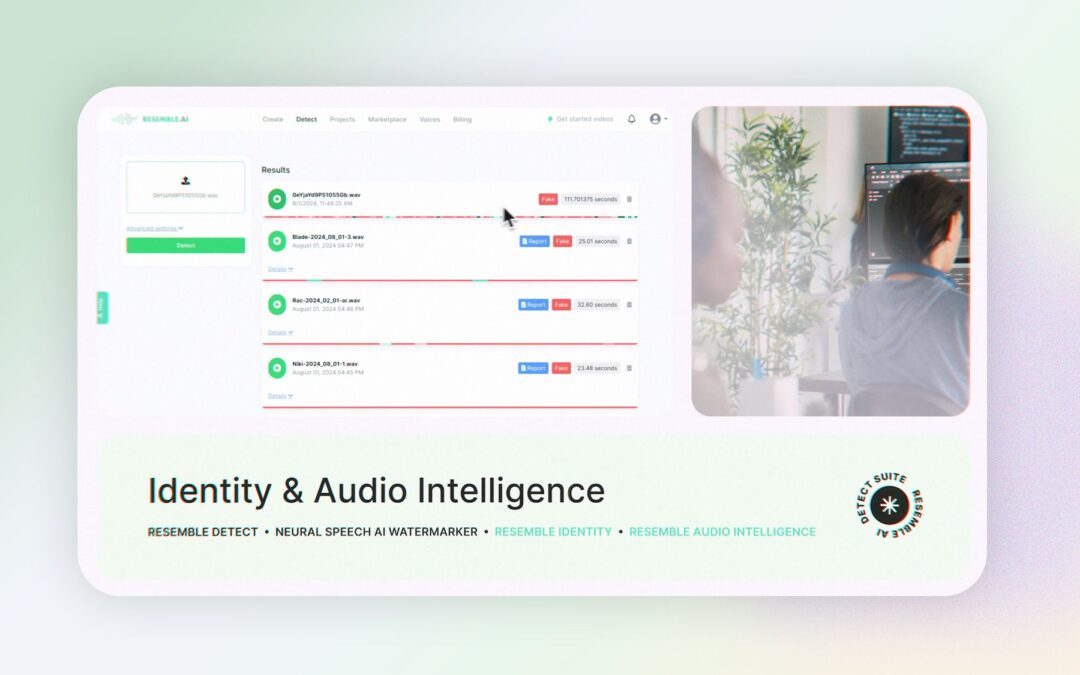

Still, we know that bad actors exist. That’s why we released Resemblyzer, an open source tool for fake speech detection, several years ago, and recently took it a step further by introducing the Neural Speech Watermarker to tackle the malicious use of AI-generated voices. The watermarker uses a deep neural network to embed data in the audio in a way that is imperceptible to listeners and difficult to detect, acting as an “invisible watermark.”

If you have any questions about any of our policies, please feel free to contact our support team.