Today, Resemble AI is excited to introduce a groundbreaking approach to cybersecurity: a voice-based deepfake simulation platform designed to help organizations test and harden their defenses against AI-driven social engineering. Early adopters have already reported up to a 90% reduction in successful attacks.

While most security awareness training relies on static videos and generic phishing emails, Resemble AI’s platform brings simulations to life with hyper-realistic voice cloning and adaptive conversations. Think of it as red-teaming, but with AI-powered impersonation attempts sent straight to your employees — via phone calls, voicemails, and even WhatsApp messages.

These aren’t scripted prompts. Our platform uses agentic AI that maintains context and handles objections in real time, powered by Resemble’s proprietary voice models and LLM integrations. From a spoofed CFO voicemail asking for wire transfers to a simulated customer demanding account access, employees experience real-world pressure, with none of the real-world fallout.

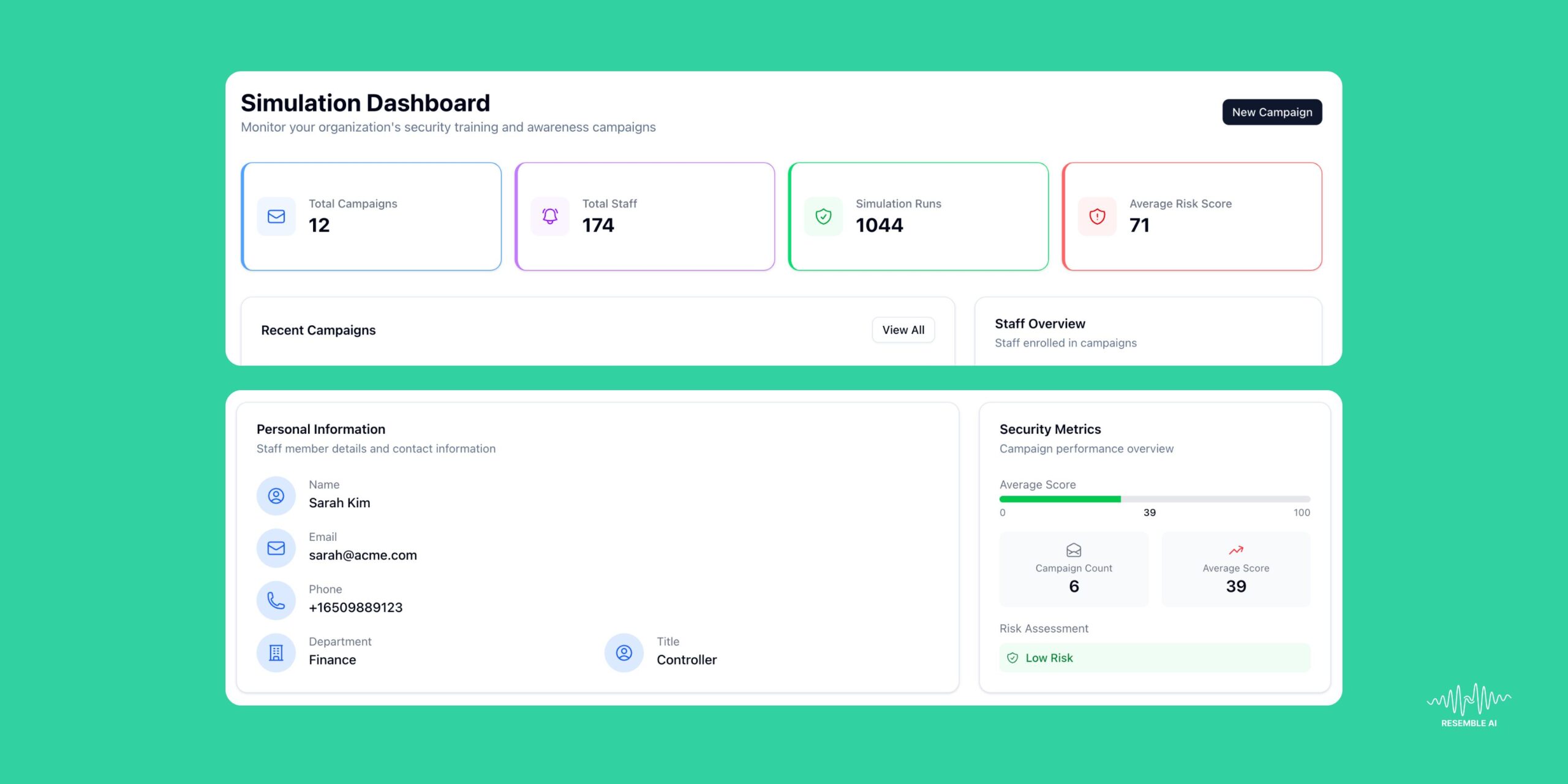

Every simulation generates a risk score from 0 to 100, personalized at the individual, team, and department level. Security teams can instantly identify blind spots and high-risk behavior, whether it’s someone clicking a suspicious link or revealing sensitive info to a fake executive. The result? Targeted training where it’s actually needed, not across-the-board compliance theater.
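To make the roll-up concrete, here is a minimal sketch of how per-simulation scores might be aggregated at the individual, team, and department level. It is illustrative only and does not describe Resemble AI’s actual scoring method: the `SimulationResult` type, the `aggregate_scores` function, the use of a simple average, and the convention that a higher score means higher risk are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class SimulationResult:
    """One simulated attack outcome (hypothetical structure)."""
    employee: str
    team: str
    department: str
    risk_score: float  # assumed convention: 0 = lowest risk, 100 = highest risk


def clamp_score(score: float) -> float:
    """Keep a score inside the 0 to 100 range."""
    return max(0.0, min(100.0, score))


def aggregate_scores(results: list[SimulationResult]) -> dict[str, dict[str, float]]:
    """Average scores per employee, per team, and per department.

    A plain mean is an assumption; a real system might weight recent
    simulations or attack severity differently.
    """
    buckets = {
        "individual": defaultdict(list),
        "team": defaultdict(list),
        "department": defaultdict(list),
    }
    for r in results:
        score = clamp_score(r.risk_score)
        buckets["individual"][r.employee].append(score)
        buckets["team"][r.team].append(score)
        buckets["department"][r.department].append(score)

    return {
        level: {name: round(mean(scores), 1) for name, scores in groups.items()}
        for level, groups in buckets.items()
    }


if __name__ == "__main__":
    # Made-up sample data, not real results.
    sample = [
        SimulationResult("alice", "treasury", "finance", 80),
        SimulationResult("alice", "treasury", "finance", 60),
        SimulationResult("bob", "treasury", "finance", 20),
    ]
    for level, scores in aggregate_scores(sample).items():
        print(level, scores)
```

Grouping the same results at three levels lets a security team sort each level by score and direct training to the highest-scoring individuals or teams first.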

This simulation platform is already in limited release, piloted by leaders in finance, healthcare, and customer service — sectors where deepfake-based fraud is both prevalent and devastating. In industries where frontline workers answer every call and make real-time decisions, deepfake protection can’t be theoretical. It has to be tested.

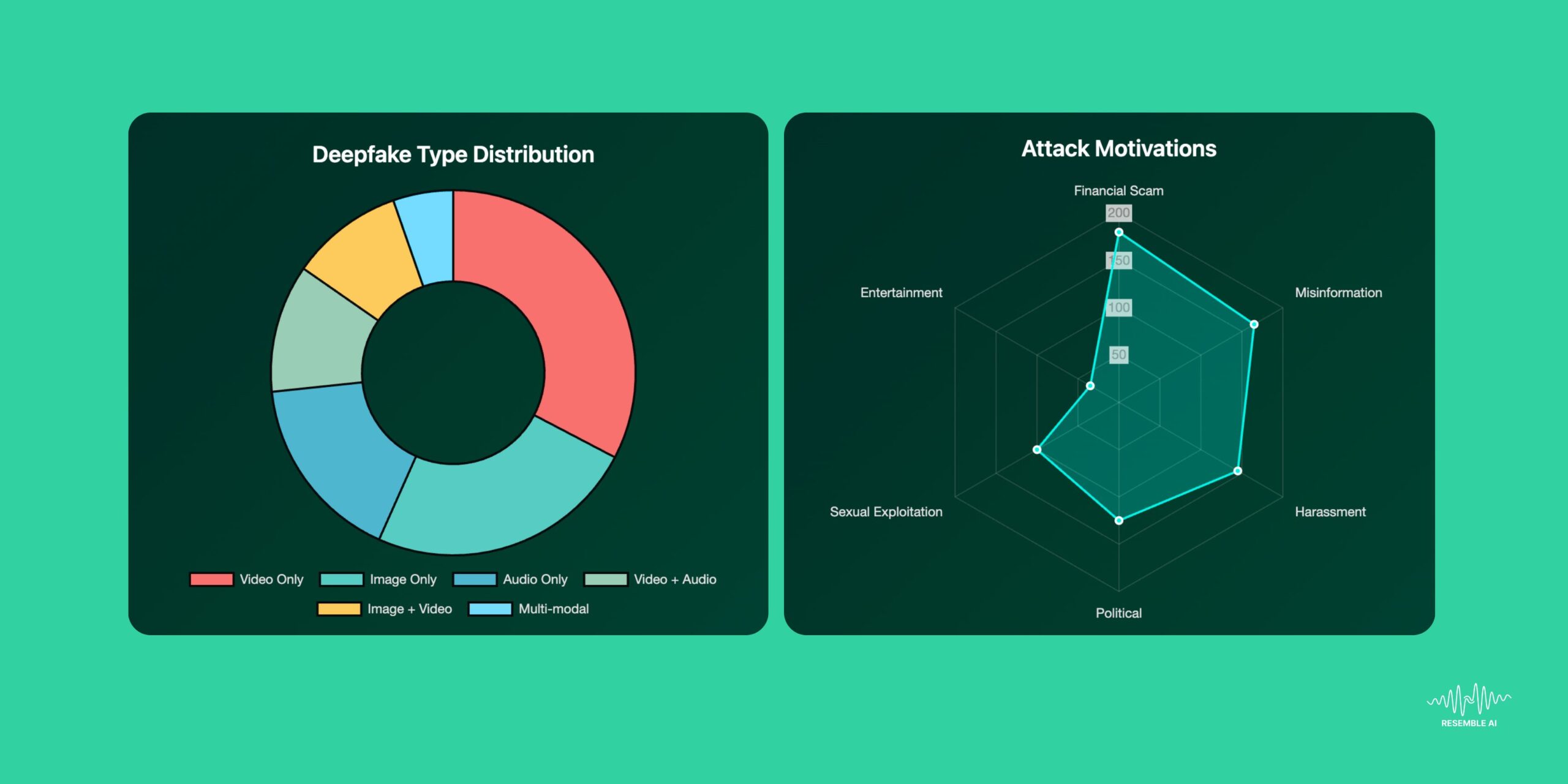

According to Resemble AI’s deepfake incident tracker, voice-based fraud has already caused over $2.6 billion in damages globally. Our new platform allows companies to take a proactive approach by simulating how those attacks would unfold, giving them a chance to intervene before real money, data, or trust is lost.

“Today’s attackers aren’t playing by the old rules,” said Zohaib Ahmed, CEO and Co-founder of Resemble AI. “They’re using cloned voices, social cues, and urgency to manipulate in real time. We built this platform so companies can experience what that looks like inside their own walls, and build muscle memory before it counts.”

## A Shift From Passive to Active Defense

Legacy tools like KnowBe4 and Cofense focus on passive learning. Resemble AI’s approach is active, adaptive, and voice-first. We don’t just test your weakest link; we help you reinforce it.

Recent deepfake scams, like the one targeting Accenture’s CEO in May, show how voice impersonation is becoming the go-to vector for attackers. Whether it’s a fake vendor, a spoofed HR exec, or a “friendly” internal request, these attacks often sound legitimate and slip past traditional training methods. Our simulations are designed to mimic that reality.

## Built Ethically. Deployed Securely.

Resemble AI leads with ethical guardrails and secure design. Our platform integrates:

- Resemble Detect for deepfake detection

- Resemblyzer for speaker verification

- Resemble Watermark for forensic watermarking